How Shadow AI Leads to Infrastructure Debt

Organizations are repeating a familiar mistake with AI. And this time, the consequences are arriving faster and costing more.

In April 2023, Samsung Electronics discovered that engineers had been pasting proprietary code into ChatGPT. Over the course of one month, three separate data leaks exposed semiconductor equipment source code, internal meeting notes, and chip optimization sequences.

The breach was permanent. Once code enters ChatGPT’s training data, it potentially becomes accessible to the system’s 100 million monthly users. Samsung couldn’t quantify the damage because they couldn’t measure it. How do you value trade secrets that now exist in a system you can’t audit? How do you calculate competitive advantage lost when your chip optimization approaches might be reconstructable by competitors using the same AI tools? The semiconductor industry operates on razor-thin margins where even small technical edges translate to market share worth billions.

Samsung responded with a company-wide ban on generative AI tools, a decision that immediately put them at a competitive disadvantage against rivals who were integrating AI more carefully. Surveys later showed that 65% of Samsung employees understood the security risks but felt productivity pressures justified using the tools anyway.

This incident, widely reported in technology media at the time, illustrates a pattern now playing out across industries. AI tools are cheap and contagious.

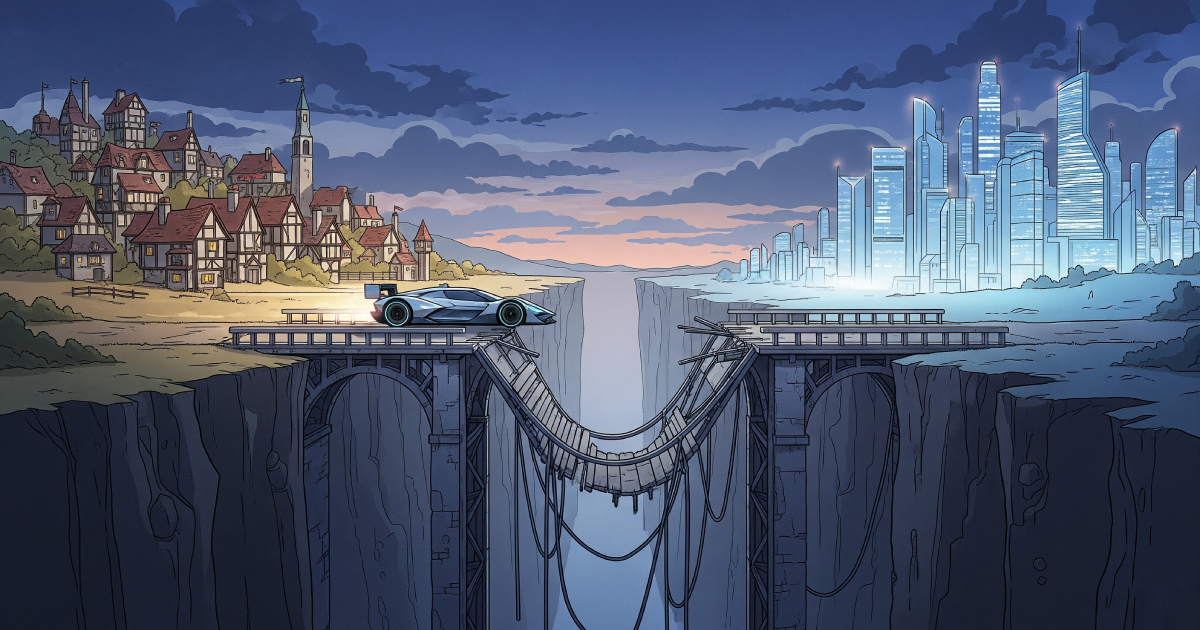

It’s a pattern that’s been visible for decades, one that executives should recognize from previous technology waves: tools get adopted faster than the organizational infrastructure needed to govern, enable, and optimize them. The result is infrastructure debt, fast-moving, expensive, and often irreversible.

With cloud computing, companies that simply moved infrastructure without redesigning architecture saw limited returns. With Agile, organizations that adopted standups and sprints without changing roles and decision-making structures failed to capture the promised velocity. Now with AI, the same pattern is emerging. But this time, the consequences are arriving faster and costing more.

The Predictable Pattern of Infrastructure Debt

The technology industry has a short memory. Major platform shifts typically follow a remarkably similar arc: initial enthusiasm and rapid tool adoption, followed by escalating disorder as the organization discovers it lacks the infrastructure to manage what it’s deployed, then a painful period of reactive remediation, and finally, for survivors, belated value capture once proper infrastructure is in place.

W. Edwards Deming famously observed that “a bad system will beat a good person every time.” Deming’s work on quality and systems thinking, which transformed manufacturing in the 1950s and later influenced software development, explains why technology adoption without system transformation consistently fails: no amount of individual competence can overcome structural constraints.

Consider the cloud migration wave of the 2010s. Organizations saw Amazon and Netflix reaping enormous benefits from cloud infrastructure and rushed to follow. Many executives treated cloud as a simple “lift and shift” opportunity. They simply moved existing applications to AWS or Azure. The technology worked, but without intent, the business cases was not better served than before.

The Agile and DevOps movements followed an identical pattern. Organizations that adopted the ceremonies (daily standups, two-week sprints, retrospectives) without changing decision-making structures, feedback loops, and team boundaries found that Agile couldn’t overcome organizational constraints. The planning meetings happened on schedule, but decisions still required six layers of approval. The sprints ran like clockwork, but deployment still took three months because the release process hadn’t changed.

This isn’t mysterious. As the Theory of Constraints teaches, every system has a limiting factor that governs how much value it can deliver. Making one part of the system faster doesn’t improve overall performance if the constraint lies elsewhere. When developers write code faster using AI, but that code still waits days for review, approval, and deployment, the system hasn’t accelerated. It’s just moved the bottleneck. In some cases, organizations have successfully addressed these downstream constraints and captured real productivity gains, but these represent exceptions rather than the norm.

The pattern is consistent enough to be predictable. Yet here we are again with AI, watching the same dynamic unfold. The difference this time is velocity: AI adoption is happening so rapidly that the infrastructure gap is creating problems at unprecedented speed and scale.

Why AI Makes the Infrastructure Gap Catastrophic

AI adoption differs from previous technology waves in three critical ways that make infrastructure debt far more dangerous: the speed of adoption creates no time for systematic preparation, the accessibility of tools enables universal shadow deployment, and the stakes involve intellectual property and regulatory compliance in ways that previous tools didn’t.

The adoption velocity is unprecedented. Within 18 months of ChatGPT’s public release, AI usage in software development reached 78%, with spending jumping six-fold from $2.3 billion to $13.8 billion (Stanford HAI AI Index, 2025). For context, cloud adoption took nearly a decade to reach similar penetration. This speed means organizations are discovering infrastructure gaps not over years but over weeks.

More concerning is the accessibility dynamic. Previous enterprise technologies (cloud infrastructure, continuous integration systems, container orchestration) required specialized knowledge and explicit provisioning. An individual developer couldn’t simply spin up a production-grade Kubernetes cluster on their personal credit card. AI tools have no such barriers. A $20 monthly subscription to Claude or ChatGPT gives any developer powerful capabilities with no IT involvement required. This lower barrier is a double edged sword. Lower barriers enable rapid innovation but create governance blindspots that persist until the first major incident.

And when an employee leave the company, their personal AI subscriptions and workflows go with them. What should be institutional capability becomes tribal knowledge that walks out the door: undocumented prompts, custom integrations, productivity shortcuts the organization doesn’t even know exist until someone’s absence exposes the gap. AI transform employee turnover from normal business friction into a business continuity risk.

This creates what we’re calling the shadow AI economy, and its scale is remarkable. While only 40% of companies report purchasing official enterprise AI subscriptions, over 90% of employees use AI tools regularly for work (State of AI in Business Report, 2025). This isn’t passive usage. Developers are integrating these tools into daily workflows, often multiple times per day, processing company code and data through systems the organization has no visibility into, no governance over, and no control of.

The consequences of this visibility gap extend far beyond the Samsung case. At financial institutions, CTOs report weekly production incidents from AI-generated code that received insufficient review. The CEO of code quality company Sonar relayed that a major bank was experiencing “an outage a week” because developers were scrutinizing AI-generated code less carefully than their own work, with the mentality “it’s not my code.” Each incident cost hundreds of thousands in remediation and damaged customer trust in ways that compounded over time.

IBM’s 2024 Cost of Data Breach Report quantifies the shadow AI premium: organizations experience $670,000 higher average breach costs when shadow AI is involved, with substantial increases in both detection time and remediation complexity.

Even in an official framework, companies rushing to deploy GenAI, often discover their data isn’t connected, their systems can’t scale, and they lack visibility into usage.

In Europe, the AI Act, implemented in 2024, makes these gaps regulatory violations, not just technical debt. Companies must demonstrate not just AI capability but AI governance: documenting data lineage, model decisions, and usage patterns.

This regulatory framework creates competitive dynamics: Companies that built proper infrastructure early can demonstrate compliance easily. Those playing catch-up face both technical remediation and regulatory exposure. The AI Act’s risk-based approach means infrastructure requirements scale with use case criticality. Healthcare AI analyzing patient data requires more rigorous governance than marketing AI generating ad copy. This tiered approach strengthen the idea that infrastructure requirements should match organizational context, not follow one-size-fits-all mandates.

The infrastructure gap isn’t just about cost, it’s about potential existential risk exposure that most executives don’t yet understand they’re carrying.

Infrastructure as the Difference Between Chaos and Capability

Platform engineering has emerged as the organizational response to infrastructure debt, though the term obscures what’s really happening. Platform engineering isn’t about technology. It’s about building organizational infrastructure that enables developers to move fast while preventing the shadow adoption and quality degradation that unmanaged AI creates.

The challenge is that platform engineering is legitimately difficult to implement successfully. Organizations that get it right see transformative results. Those that don’t often make things worse by adding overhead without adding value.

Spotify’s transformation from constraint to catalyst illustrates what success looks like when platform engineering is done well.

In 2018, Spotify’s machine learning efforts were fragmented across teams. Data scientists spent weeks setting up infrastructure before they could train a single model. Onboarding new ML engineers took 40-plus days. Mostly waiting for access to systems, navigating undocumented processes, and reconstructing tribal knowledge.

The company built ML Home, a centralized platform that made a strategic decision: treat internal developers as customers, not compliance risks. The platform collapsed infrastructure setup from weeks to hours. New engineers could go from first day to tenth pull request in under 20 days, a 55% reduction in onboarding time.

The results demonstrate what happens when platform teams focus on value delivery rather than mandates. ML Home reached 99% voluntary adoption. Not mandated, but chosen because it made work easier. Daily active users grew 200% in the first year. The platform now tracks more than 220 ML projects and enables productivity equivalent to three additional full-time engineers per ten developers.

The Spotify transformation reveals a broader pattern. Organizations that successfully build enabling infrastructure focus on solving real developer pain points, make official channels easier than shadow alternatives, and measure success through adoption and satisfaction rather than feature counts.

Before examining the Platform Engineering causes of failure, I should acknowledge my bias: I lead platform engineering. That gives me both deep expertise in what works and institutional blindness to alternatives. The evidence I present is real, but my interpretation favors a specific solution. Judge accordingly.

Why Platform Engineering Fails: The Organizational Design Problem

The uncomfortable truth that the platform engineering community rarely discusses is that most implementations fail. Not because of technical inadequacy, but because of organizational design mismatches that no amount of engineering can overcome.

A pattern emerges across my discussions with platform engineering leaders: the “build it and they will come” failure. In a typical trajectory, a 500-person enterprise software company invests $1-3M hiring experienced engineers from companies like Netflix or Google, deploying Kubernetes and modern observability tools, following best practices those engineers saw work at scale. Eighteen months later, adoption sits between 20-30%. The fundamental mistake in these cases: treating platform engineering as a technical challenge rather than a product challenge. Teams focus entirely on capabilities without validating whether those capabilities solve problems developers experience as painful. When companies conduct post-mortem user research, they consistently discover their developers’ actual pain points (database access, legacy code documentation, cross-team coordination) bear little resemblance to what the platform provides.

The second failure mode is the ticket-ops trap. Platform teams become vending machines, responding to queued requests without vision. They’re buried in one-off infrastructure requests, never building self-service capabilities. Both platform engineers and developers become frustrated. The former by endless toil, the latter by slow response times.

Research on AI pilot failures reveals similar organizational patterns. MIT’s study of generative AI initiatives showed 95% fail to reach production. The barriers are organizational: resistance to adopting new tools, quality concerns from poor integration, lack of executive sponsorship, and challenging change management.

There’s an instructive paradox here. The same knowledge workers who use ChatGPT daily for personal tasks describe enterprise AI tools as unreliable. They integrate consumer AI into their workflows seamlessly but resist official enterprise tools. The technology is identical, what differs is the organizational wrapper. Consumer tools learn from usage and adapt to needs. Enterprise tools, constrained by governance frameworks that prioritize control over experience, often can’t.

Alternative Approaches: When Platform Engineering Isn’t the Answer

Platform engineering represents one response to infrastructure debt, but it’s not the only viable approach and for many organizations, it’s not the right one. Three alternatives merit serious consideration:

- Accept and Optimize for Chaos. Some organizations, particularly smaller companies or those in fast-moving competitive landscapes, make an explicit strategic choice: move fast, accept shadow AI, and manage risk through other means. They invest in rapid incident response, comprehensive cyber insurance, and clear individual accountability rather than centralized governance. This works when speed-to-market outweighs risk exposure, when the organization is small enough that informal coordination suffices, or when the competitive landscape demands maximum velocity. The trade-off is understood and intentional: higher breach risk and potential compliance exposure in exchange for no governance friction.

- Buy Governance-as-a-Service. Rather than building internal platform capabilities, organizations can purchase specialized AI governance tools from vendors like WrangleAI, Harmonic Security, or enterprise vendors embedding AI governance into existing platforms. This approach makes sense for organizations under 100 developers who can’t justify dedicated platform staff, for companies with limited technical sophistication, or for those who need governance quickly without internal buildout. The limitation: these tools provide visibility and control but not enablement they tell you about shadow AI but don’t make official channels faster than shadow alternatives.

- Federated Accountability Without Central Platforms. Some organizations radically decentralize AI adoption, pushing governance responsibility to individual teams with clear accountability frameworks. Each team makes its own tool choices but bears full responsibility for security, compliance, and costs. This works in organizations with high engineering maturity, strong team-level leadership, and clear accountability mechanisms. The advantage: maximum autonomy and flexibility. The risk: inconsistency, duplicated effort, and governance gaps between teams.

Platform engineering makes sense when organizations meet specific conditions: they’re large enough to fund dedicated teams (typically 250+ developers), they face regulatory requirements demanding centralized governance, they have procurement leverage to negotiate better terms through consolidation, and they’re willing to invest 12-24 months before seeing returns. For organizations not meeting these criteria, alternative approaches may be more appropriate.

Now let’s be honest. most organizations reading this already have significant shadow AI adoption. You’re not building greenfield infrastructure you’re remediating existing chaos. That changes the equation significantly. Remediation requires different tactics than prevention: discovery tools to find what exists, migration strategies to move users from shadow to sanctioned tools, and change management to overcome resistance from people dependent on tools you’re replacing.

The scale question also matters more than platform engineering advocates (myself included) typically admit. A five-person startup and a 5,000-person enterprise both need governance, but the appropriate solutions differ dramatically. The startup implements governance through clear policies and a senior engineer spending 20% time on platform concerns. The enterprise needs dedicated teams. The principles remain consistent visibility, enablement, and governance but the implementation scales radically.

The Closing Window: Why Timing Matters

The infrastructure debt pattern suggests a question executives should be asking: when should we act?

The evidence indicates there’s a narrow window between recognizing the problem and it becoming prohibitively expensive to fix. Organizations that build infrastructure proactively, before the first major security incident, before shadow AI becomes deeply entrenched, before technical debt compounds, capture value earlier and avoid crisis-mode remediation costs.

That window is narrowing faster with AI than with previous technology waves. Cloud adoption was slow enough that organizations had years to react. Agile transformation could be piloted in small teams before scaling. AI adoption is happening so quickly that the gap between “we should probably do something” and “we’re in crisis mode” is measured in months, not years.

The data suggests three distinct scenarios based on timing:

- Organizations acting now (before major incidents) can build infrastructure deliberately, experiment with governance models, and establish practices while shadow AI is still manageable. They capture productivity gains while avoiding the breach premiums and compliance violations that come from unmanaged adoption. The investment is strategic, not reactive.

- Organizations waiting for the first incident face a different calculus. After Samsung’s leak or a major production failure, infrastructure investment becomes crisis response. The pressure to move fast creates shortcuts. Governance becomes heavy-handed rather than enabling because the focus is damage control, not value creation. Developers who’ve become dependent on shadow tools resist centralization. The technical debt has accumulated to the point where remediation costs multiply.

- Organizations that wait multiple years discover that shadow AI has become so embedded that centralization is practically impossible. Hundreds of tools are in use. Developers have built critical workflows around unapproved systems. Attempting to centralize triggers massive resistance because you’re taking away tools people depend on. Some organizations in this scenario simply accept permanent fragmentation and optimize for governance of chaos rather than governance of order.

The financial implications differ dramatically. Organizations building infrastructure proactively report 12-24 month payback periods with documented cost savings of 15-30%. Those responding to incidents spend comparable amounts but primarily avoid further losses rather than capturing gains. Those in permanent crisis mode pay premium costs indefinitely. The $670,000 breach premium becomes the recurring cost of doing business.

By making infrastructure investment the explicit prerequisite for expanded AI adoption you can get: real-time cost visibility deployed within 60 days, automated quality gates for AI-generated code within 90 days, self-service provisioning for approved tools within six months.

Cost observability caught the kind of issue platform teams frequently encounter: unauthorized GPU-intensive experiments, in one typical pattern. Potentially processing sensitive data through unapproved instances. By preventing a single incident that can become a compliance violation, the infrastructure investment pay for itself.

More importantly, the culture have to shift. Developers should be able to request AI tools through the platform team, which provisions them in hours with embedded governance. This way, adoption of official tools is rising as shadow usage declines. The platform doesn’t need to be perfect but to become the path of least resistance rather than the obstacle to avoid.

Beyond AI: The Infrastructure-First Imperative

The lesson from cloud, Agile, and AI adoption is clear: organizational infrastructure must evolve in lockstep with tool adoption, or the tools often create chaos rather than capability. This isn’t specific to AI. It’s a fundamental principle of technology transformation that executives tend to underestimate.

The pattern suggests a different approach to technology adoption. Instead of asking “What tools should we buy?” executives should ask “What organizational infrastructure do we need to capture value from these tools?” The investment decision isn’t whether to purchase GitHub Copilot or ChatGPT Enterprise, it’s whether to build the platform capabilities that make those tools productive rather than problematic.

This reframing has significant implications for how technology investments get evaluated. Traditional ROI calculations focus on tool costs versus productivity gains: “If developers get 30% faster, we save X salary dollars.” But the actual equation includes infrastructure costs, quality management overhead, security and compliance systems, and the opportunity cost of shadow adoption. More often than not, the tools are the smallest line item. In fact, developer velocity increase thanks to AI usage come with higher code churn and instability.

Forward-thinking organizations are making infrastructure investment the prerequisite for tool adoption rather than the response to tool problems. They’re asking: Do we have real-time visibility into AI usage? Can we provision approved tools in hours? Do we have automated quality gates? Have we built cost management systems? Do we have governance frameworks that balance velocity with compliance?

Only when those infrastructure capabilities exist does tool adoption make strategic sense. This reverses the typical sequence. Instead of adopting tools and scrambling to build infrastructure reactively, organizations build infrastructure first and adopt tools deliberately.

The evidence suggests this approach works. Organizations with mature platform capabilities achieve 15-55% productivity gains from AI adoption and 30-83% cost savings through consolidated management.

As AI capabilities accelerate and new tools emerge monthly, the infrastructure gap will likely widen for organizations that don’t invest deliberately. The window for building enabling infrastructure before the next technology wave is narrowing. Companies that recognize infrastructure as strategic investment rather than operational overhead will capture disproportionate advantage. Those that continue treating infrastructure as an afterthought will likely discover, repeatedly and painfully, that cheap tools create expensive chaos.

The pattern is clear. The window is closing. The choice is now.

References

- Google Cloud DORA (DevOps Research and Assessment). “Accelerate State of DevOps 2024.” Google Cloud, 2024.

- Google Cloud DORA. “2025 State of AI-Assisted Software Development.” Google Cloud, January 2025.

- IBM Security. “Cost of Data Breach Report 2024.” IBM Corporation, July 2024.

- Shrikanth, Nick and Omri Zur. “Coding on Copilot: 2023 Data Suggests Downward Pressure on Code Quality.” GitClear, January 2024.

- Stack Overflow. “2025 Developer Survey.” Stack Overflow, May 2025.

- Stanford Institute for Human-Centered Artificial Intelligence. “The 2025 AI Index Report.” Stanford HAI, March 2025.

- METR (Model Evaluation & Threat Research). “Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity.” METR, July 2025.

- The GenAI Divide STATE OF AI IN BUSINESS 2025. MIT Nanda.

- Cloud Native Computing Foundation. “Platform Engineering Maturity Model.” CNCF, 2024.

- Lopa, Maisha. “Product Lessons from ML Home: Spotify’s One-Stop Shop for Machine Learning.” Spotify Engineering Blog, January 19, 2022.

- Samsung Electronics data leak incidents, April 2023. Multiple news sources including Bloomberg Technology, Reuters Technology, and industry analysis reports.

Methodology and Sources

This article synthesizes data from multiple authoritative research studies conducted between 2024-2025:

Primary quantitative sources:

- Google DORA (DevOps Research and Assessment), “Accelerate State of DevOps 2024” and “2025 State of AI-Assisted Software Development” (90,000+ respondents globally)

- IBM Security, “Cost of Data Breach Report 2024” (industry-wide breach cost analysis)

- GitClear, “Coding on Copilot: 2023 Data Suggests Downward Pressure on Code Quality” (analysis of 211 million changed lines of code, 2020-2024)

- Stack Overflow, “2025 Developer Survey” (65,000+ developers)

- Stanford HAI, “2025 AI Index Report” (global AI investment and adoption tracking)

- CNCF (Cloud Native Computing Foundation), Platform Engineering Maturity Model surveys

- MIT/METR (Model Evaluation & Threat Research), “Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity”

Case studies:

- Spotify and Airbnb platform transformations: From published engineering blog posts and conference presentations

- Samsung Electronics incident (April 2023): Based on widely reported public incidents and subsequent analysis.

- Platform engineering success and failure cases: Direct interviews with CTOs and platform engineering leaders conducted between August 2024 - September 2025, with permission granted for anonymized use.